Visual Prompt Tuning

Can you transfer prompts? Where is the best place to insert prompts? Do they increase adversarial robustness? Find out here :)

Introduction

This project, completed for the Introduction to Deep Learning course, focused on Visual Prompt Tuning (VPT) in Vision Transformers (ViT).

Key Concepts

Vision Transformer (ViT): A neural network architecture that applies the transformer model, originally designed for natural language processing, to image analysis tasks.

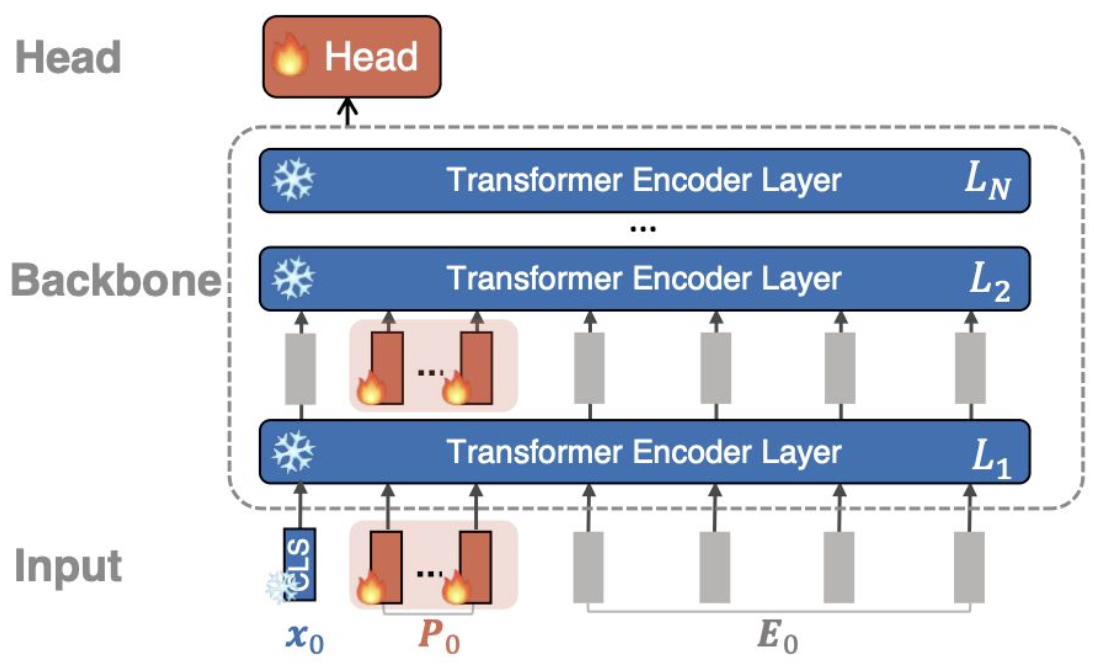

Visual Prompt Tuning (VPT): A technique that adds a small set of learnable continuous vectors (prompts) in the embedding or pixel space. The transformer backbone stays frozen, and the task-specific prompts are updated during training.
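To make the "frozen backbone, trainable prompts" idea concrete, here is a minimal NumPy sketch. It is a toy under loud assumptions: the backbone is a mean-pool followed by a fixed linear map (a stand-in, not a real ViT), the sizes and the learning rate are made up, and the gradient is written out by hand. It is not the project's implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
n_tokens, n_prompts, d_model, n_out = 6, 4, 8, 3  # toy sizes, chosen for illustration

# Frozen "backbone": mean-pool the sequence, then apply a fixed linear map.
# This is a stand-in for a pretrained ViT, not a real transformer.
W_frozen = rng.normal(size=(d_model, n_out))
W_before = W_frozen.copy()

tokens = rng.normal(size=(n_tokens, d_model))    # embedded image patches (toy data)
target = rng.normal(size=n_out)                  # toy regression target
prompts = rng.normal(size=(n_prompts, d_model))  # the only trainable parameters


def forward(prompts):
    seq = np.concatenate([prompts, tokens], axis=0)  # prepend prompts to the sequence
    return seq.mean(axis=0) @ W_frozen


def loss(prompts):
    return 0.5 * np.sum((forward(prompts) - target) ** 2)


loss_before = loss(prompts)
for _ in range(100):
    residual = forward(prompts) - target
    # Hand-derived gradient of the loss w.r.t. one prompt row. Mean pooling makes
    # it identical for every row. W_frozen is never updated.
    grad_row = (W_frozen @ residual) / (n_prompts + n_tokens)
    prompts = prompts - 0.5 * grad_row  # broadcasts over all prompt rows
loss_after = loss(prompts)

print(f"loss before: {loss_before:.4f}, after: {loss_after:.4f}")
```

Only `prompts` changes during the loop; the backbone weights are identical before and after, which is the property that keeps the number of trained parameters small.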

Research Focus

Our project explored various aspects of VPT through several experiments and ablation studies:

- Prompt Placement

- Prompt Size

- VPT-Deep Layer Depth

- Adversarial Robustness

- Transfer Learning

Experiments and Results

Prompt Placement

We compared three different approaches for prompt placement:

- Prepending prompts at the pixel layer

- Prepending prompts at the embedding layer

- Adding prompts element-wise to the embedding layer

Results showed that prepending to the embedding layer yielded the best performance.
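The three placements above can be sketched in terms of what happens to the token sequence. This is an illustrative sketch only: the sizes assume a ViT-B/16 on 224x224 images (196 patches of 16x16x3 = 768 values, hidden size 768), `W_embed` is a random stand-in for the frozen patch embedding, the prompt length of 50 is arbitrary, and the function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_patches, patch_dim, d_model, n_prompts = 196, 768, 768, 50  # assumed ViT-B/16 sizes

patches = rng.normal(size=(n_patches, patch_dim))       # flattened image patches
W_embed = rng.normal(size=(patch_dim, d_model)) * 0.02  # stand-in for the frozen patch embedding
cls_token = np.zeros((1, d_model))                      # stand-in for the [CLS] token


def embed(x):
    return x @ W_embed


def prepend_pixel(pixel_prompts):
    # Prompts live in pixel space and go through the frozen embedding with the patches.
    x = np.concatenate([pixel_prompts, patches], axis=0)
    return np.concatenate([cls_token, embed(x)], axis=0)


def prepend_embedding(prompts):
    # Prompts live in embedding space and are inserted between [CLS] and the patch tokens.
    return np.concatenate([cls_token, prompts, embed(patches)], axis=0)


def add_embedding(prompts):
    # Prompts are added element-wise to the patch tokens; sequence length is unchanged.
    return np.concatenate([cls_token, embed(patches) + prompts], axis=0)


seq_pixel = prepend_pixel(rng.normal(size=(n_prompts, patch_dim)))
seq_prepend = prepend_embedding(rng.normal(size=(n_prompts, d_model)))
seq_add = add_embedding(rng.normal(size=(n_patches, d_model)))

print(seq_pixel.shape, seq_prepend.shape, seq_add.shape)
```

Both prepend variants lengthen the sequence by the number of prompts, while the element-wise variant keeps it at 197 tokens and instead needs a prompt shaped like the patch tokens.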

Prompt Size

We conducted a sweep from 25 to 150 tokens to determine the optimal prompt size. Key findings include:

- Adding prompts significantly increased accuracy

- Prompt lengths of 50 and 125 tokens achieved the highest accuracy, with similar results
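What the prompt size changes in practice is the number of trainable parameters and the sequence length the frozen backbone has to process. The sketch below tabulates both for the sweep range. It assumes a ViT-B/16 (hidden size 768, 197 base tokens), prompts at the input layer only, and a step of 25 tokens; none of these are stated in the write-up, and `prompt_budget` is a hypothetical helper.

```python
D_MODEL = 768          # assumed ViT-B hidden size
BASE_TOKENS = 1 + 196  # [CLS] + 14x14 patches (224x224 image, 16x16 patches)


def prompt_budget(n_prompts):
    seq_len = BASE_TOKENS + n_prompts
    return {
        "n_prompts": n_prompts,
        "trainable_prompt_params": n_prompts * D_MODEL,
        "sequence_length": seq_len,
        # Self-attention cost grows roughly with the square of the sequence length.
        "relative_attention_cost": round((seq_len / BASE_TOKENS) ** 2, 2),
    }


sweep = [prompt_budget(p) for p in range(25, 151, 25)]  # 25, 50, ..., 150 (assumed step)
for row in sweep:
    print(row)
```

Under these assumptions, even the largest setting adds 115,200 prompt parameters, which is small next to the backbone, so the main cost of longer prompts is the longer sequence.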

VPT-Deep Layer Depth

We investigated how performance depends on the number of transformer encoder layers that receive prepended learnable prompts.
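A rough sketch of what varying the depth means: each prompted layer gets its own set of learnable prompts, which overwrite the prompt slots before that layer runs. The code assumes prompts go into the first `depth` layers (the write-up does not say which layers were used), and `make_layer` is a toy single-head attention stand-in for a frozen encoder layer. All names are hypothetical.

```python
import numpy as np


def make_layer(W):
    """Toy frozen layer: softmax self-attention plus a residual connection."""
    def layer(x):
        scores = x @ x.T / np.sqrt(x.shape[1])
        scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
        attn = np.exp(scores)
        attn = attn / attn.sum(axis=1, keepdims=True)
        return x + attn @ x @ W
    return layer


def vpt_deep_forward(tokens, deep_prompts, layers):
    """tokens: (1 + n, d) with [CLS] first; deep_prompts: one (p, d) array per prompted layer."""
    p = deep_prompts[0].shape[0]
    x = np.concatenate([tokens[:1], deep_prompts[0], tokens[1:]], axis=0)
    for i, layer in enumerate(layers):
        if 0 < i < len(deep_prompts):
            # Overwrite the prompt slots with this layer's own learnable prompts.
            x = np.concatenate([x[:1], deep_prompts[i], x[1 + p:]], axis=0)
        x = layer(x)
    return x


rng = np.random.default_rng(0)
n, p, d, n_layers = 5, 3, 8, 4  # toy sizes
tokens = rng.normal(size=(1 + n, d))
layers = [make_layer(rng.normal(size=(d, d)) * 0.1) for _ in range(n_layers)]
all_prompts = [rng.normal(size=(p, d)) for _ in range(n_layers)]

out_shallow = vpt_deep_forward(tokens, all_prompts[:1], layers)  # depth 1: input layer only
out_deep = vpt_deep_forward(tokens, all_prompts[:3], layers)     # depth 3
print(out_shallow.shape, out_deep.shape)
```

With a depth of 1 this reduces to prepending prompts at the input only; the number of trainable prompt parameters grows linearly with depth (`depth * p * d`).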

Adversarial Robustness

We tested the model's resilience to input noise. Adding a prompt consistently increased robustness to noisy inputs.
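One simple way to run this kind of test is to perturb the inputs and track accuracy as the noise level grows. The sketch below assumes additive Gaussian noise on images scaled to [0, 1]; the write-up does not specify the noise type, and `predict` here is a toy brightness classifier standing in for the prompted or unprompted model.

```python
import numpy as np


def accuracy_under_noise(predict, images, labels, sigma, rng):
    """Accuracy of `predict` on images corrupted by Gaussian noise with std `sigma`."""
    noise = rng.normal(scale=sigma, size=images.shape)
    noisy = np.clip(images + noise, 0.0, 1.0)  # keep pixel values in the valid range
    return float(np.mean(predict(noisy) == labels))


def predict(batch):
    # Toy stand-in classifier: label 1 if the image is brighter than 0.5 on average.
    return (batch.mean(axis=(1, 2)) > 0.5).astype(int)


rng = np.random.default_rng(0)
images = rng.uniform(0.0, 1.0, size=(64, 8, 8))  # toy "images"
labels = predict(images)                         # toy labels, clean accuracy is 1.0

sigmas = [0.0, 0.1, 0.2, 0.4]                    # assumed noise levels
curve = {s: accuracy_under_noise(predict, images, labels, s, rng) for s in sigmas}
print(curve)
```

Running the same function for a model with and without prompts, at the same noise levels, gives two accuracy curves that can be compared directly.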

Transfer Learning

We explored whether prompts trained on one dataset (CUB-200) could provide a better initialization than standard methods when applied to a new dataset (Stanford Dogs).
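The comparison boils down to two ways of initializing the prompts for the new task. In the sketch below, the random baseline uses a uniform draw with a Xavier-style bound as a stand-in for "standard methods" (an assumption; the write-up does not name the scheme), the prompt length and hidden size are assumed, and the function names are hypothetical. The classification head is re-created in both cases because CUB-200 has 200 classes and Stanford Dogs has 120.

```python
import numpy as np

N_PROMPTS, D_MODEL = 50, 768  # assumed prompt length and ViT-B hidden size
DOGS_CLASSES = 120            # Stanford Dogs (CUB-200 has 200, so the head cannot be reused)


def random_prompts(rng, n_prompts=N_PROMPTS, d_model=D_MODEL):
    # Uniform init with a Xavier-style bound; a stand-in for the random baseline.
    bound = np.sqrt(6.0 / (n_prompts + d_model))
    return rng.uniform(-bound, bound, size=(n_prompts, d_model))


def init_for_new_task(rng, pretrained_prompts=None):
    """Return (prompts, head) for the target task."""
    if pretrained_prompts is None:
        prompts = random_prompts(rng)
    else:
        prompts = pretrained_prompts.copy()   # start from prompts learned on the source task
    head = np.zeros((D_MODEL, DOGS_CLASSES))  # fresh head in both cases
    return prompts, head


rng = np.random.default_rng(0)
cub_prompts = rng.normal(size=(N_PROMPTS, D_MODEL))  # placeholder for prompts trained on CUB-200

baseline_prompts, baseline_head = init_for_new_task(rng)
transfer_prompts, transfer_head = init_for_new_task(rng, pretrained_prompts=cub_prompts)
print(baseline_prompts.shape, transfer_prompts.shape, transfer_head.shape)
```

Because the backbone is frozen and shared, the prompts are the only task-specific state carried over from the source dataset.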

Key Findings

- Prepending prompts to the embedding layer yielded the best performance, possibly because it lets the prompts learn a condensed representation of task-specific characteristics.

- Adding prompts led to a significant increase in accuracy, with only minor differences across prompt sizes.

- VPT demonstrated increased robustness to noisy inputs compared to models without prompts.

- Transfer learning experiments showed promise in using pretrained prompts as initialization for new tasks.